We are interested in real-time depth sensing and spatial mapping. 3D spatial data is fundamental to modern robotics and digital-twin applications, enabling autonomous systems to perceive, interpret, and interact with real-world environments. Computer vision, time-of-flight sensors, and simulation environments form the core foundation of our research activities.

Stereo Camera

A stereo camera is a vision system that uses two (or more) lenses placed a known distance apart to capture images from slightly different viewpoints, much as human eyes do. By comparing these perspectives and measuring how far each scene point shifts between them (its disparity), the system can estimate depth and reconstruct a 3D representation of the scene.
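
As a rough illustration of how two viewpoints yield depth, the sketch below applies the standard rectified-stereo relation Z = f · B / d, where f is the focal length in pixels, B is the distance between the lenses (the baseline), and d is the disparity in pixels. The function name and the numeric values are hypothetical and chosen only for illustration; they do not describe our camera.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
FOCAL_PX = 700.0
BASELINE_M = 0.12

for disparity in (70.0, 35.0, 7.0):
    depth = depth_from_disparity(disparity, FOCAL_PX, BASELINE_M)
    print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Because depth is inversely proportional to disparity, nearby objects shift a lot between the two images while distant ones barely move, which is why depth resolution degrades with range.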

Point Cloud

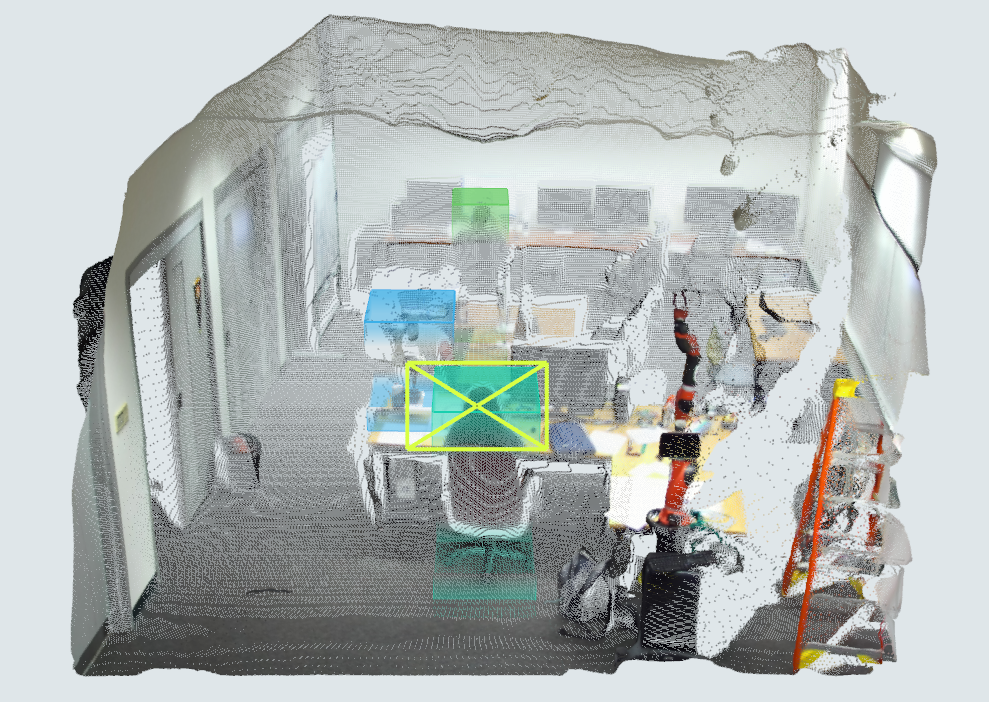

The image above presents a 3D point cloud reconstruction of our laboratory environment generated using a stereo camera. The visualization captures detailed spatial geometry, including desks, chairs, computers, and robotic equipment, forming a realistic three-dimensional map of the workspace. Color-coded bounding boxes highlight specific detected objects or regions of interest for object recognition, tracking, or obstacle mapping.
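
One common way to build such a point cloud, sketched here under a simple pinhole-camera assumption, is to back-project each pixel with a known depth into 3D and then summarize a group of points with an axis-aligned bounding box. The helper names, intrinsics (`fx`, `fy`, `cx`, `cy`), and sample pixels are hypothetical, and this is not necessarily the pipeline used to produce the image.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with depth Z to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def bounding_box(points):
    """Axis-aligned bounding box as (min_corner, max_corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


# Hypothetical intrinsics for a 640x480 image.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

# A few (u, v, depth) samples that might belong to one detected object.
pixels = [(320, 240, 2.0), (420, 240, 2.0), (320, 340, 2.5)]
cloud = [backproject(u, v, z, FX, FY, CX, CY) for u, v, z in pixels]

lo, hi = bounding_box(cloud)
print("min corner:", lo)
print("max corner:", hi)
```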

LiDAR Sensing

The team is also investigating the integration of LiDAR (light detection and ranging) and camera data. Below is a simulated LiDAR point cloud captured in the lab. On the right, one can roughly make out the robotic manipulator, a 30″ monitor, and a workbench (a large flat surface). On the left, three study desks are visible. The simulated LiDAR sensor is mounted on top of the robotic manipulator.
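
A typical first step in combining LiDAR and camera data is to project LiDAR points into the camera image so that each 3D point can be paired with a pixel. The following is a minimal sketch of that idea, assuming the LiDAR-to-camera rotation `R` and translation `t` are already known from calibration and that the camera frame has z pointing forward. All names and values are hypothetical and do not reflect our actual sensor setup.

```python
def lidar_to_pixel(point_lidar, R, t, fx, fy, cx, cy):
    """Project a LiDAR point into the image; return None if behind the camera."""
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (
        sum(R[i][j] * point_lidar[j] for j in range(3)) + t[i]
        for i in range(3)
    )
    if z <= 0:
        return None
    # Pinhole projection onto the image plane.
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical calibration: sensors aligned, LiDAR 10 cm above the camera
# (camera y axis assumed to point down, so the offset is +0.10 in y).
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [0.0, 0.10, 0.0]

for point in [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0)]:
    print(point, "->", lidar_to_pixel(point, R, t, 500.0, 500.0, 320.0, 240.0))
```

Points that land inside the image bounds can then take on the color or detection label of the pixel they fall on, which is one simple form of LiDAR-camera fusion.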